Augmented reality startup Magic Leap made headlines last year when it raised over $540 million in funding while still managing to hide its plans from public view.

In March of this year, the company released a concept video created by VFX powerhouse Weta. While it tantalized viewers with its action-packed antics, it was a bit more fiction than science, presenting only a possible future, not actual output from the fabled contraption.

No strings attached (but probably lots of cables)

Well, apparently, things have changed.

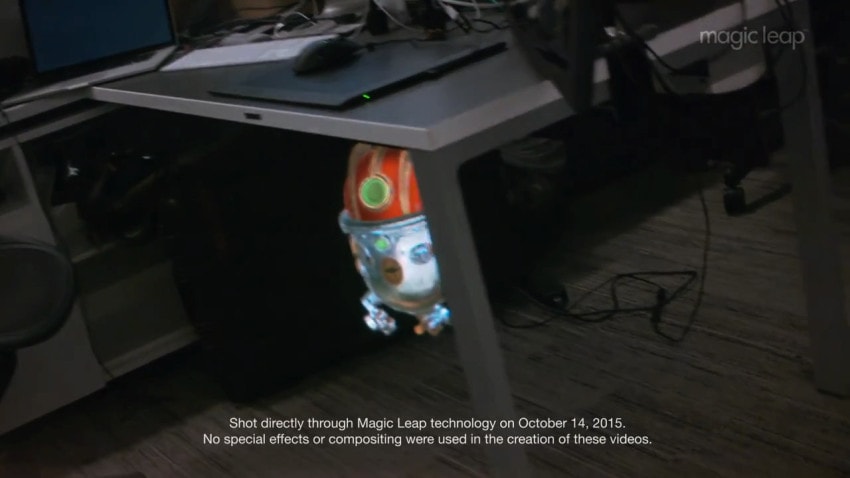

Witness the YouTube video above. Published by Magic Leap itself, the description promises it was “shot directly through Magic Leap technology on 10/14/15, without the use of special effects or compositing.” In other words, no Weta wizardry.

If you’re not impressed, let’s break it down real quick — because it’s definitely impressive.

1. Rack focus?

The opening shot starts on an office plant with a shallow depth of field. But as the camera pans up, the focus shifts to the character floating in the mid-ground.

This might not seem like a big deal, but to pull this off in real time suggests that Magic Leap is not only capable of understanding depth but also of matching the user’s focus. If true, that would be a huge breakthrough by itself.

We see more evidence of this focus-shifting in the solar system sequence.

2. Real-time occlusion

Towards the end of the robot shot, we see another impressive feat: real-time occlusion of a virtual object behind a physical object.

Real-time occlusion of a virtual object behind a physical object

To pull this off, Magic Leap had to scan the environment, build an accurate 3D model of it and then perform real-time 3D tracking and compositing. This is similar to some of the tech built into Microsoft HoloLens and Google’s Project Tango. It’s the foundation for machine vision in 3D space, and it’s a sign that we do, in fact, live in the future.
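To make the occlusion step concrete, here is a minimal sketch of a per-pixel depth test, the standard way a compositor decides whether a virtual pixel should be hidden by a real surface. Nothing here comes from Magic Leap: the function name, the one-row "image" and the depth values are all made up for illustration, and a real system would work on full depth maps from its environment scan.

```python
def composite_with_occlusion(real_pixels, real_depth, virt_pixels, virt_depth):
    """Show a virtual pixel only where it is closer to the camera than the real surface.

    All four arguments are equal-length lists (a hypothetical one-row image).
    A virtual pixel of None means "nothing rendered here".
    """
    out = []
    for r_px, r_d, v_px, v_d in zip(real_pixels, real_depth, virt_pixels, virt_depth):
        if v_px is not None and v_d < r_d:
            out.append(v_px)   # virtual object is in front: draw it
        else:
            out.append(r_px)   # real surface is in front: it occludes the virtual object
    return out


# Illustrative scene: a wall 3 m away, a table leg 1 m away, a robot floating at 2 m.
real_pixels = ["wall", "wall", "table_leg", "wall"]
real_depth = [3.0, 3.0, 1.0, 3.0]
virt_pixels = [None, "robot", "robot", "robot"]
virt_depth = [float("inf"), 2.0, 2.0, 2.0]

result = composite_with_occlusion(real_pixels, real_depth, virt_pixels, virt_depth)
print(result)  # ['wall', 'robot', 'table_leg', 'robot']
```

The robot disappears only in the column where the table leg is nearer than it is, which is the effect visible at the end of the robot shot. The hard part in practice is getting an accurate, up-to-date depth value for the real world at every pixel.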

Bonus points if someone can figure out how to sample lighting sources from the real world and use them for compositing purposes.

3. True opacity (?)

According to people I’ve talked to who’ve test-driven Microsoft’s HoloLens, its virtual objects aren’t able to achieve 100% opacity. They’re translucent.

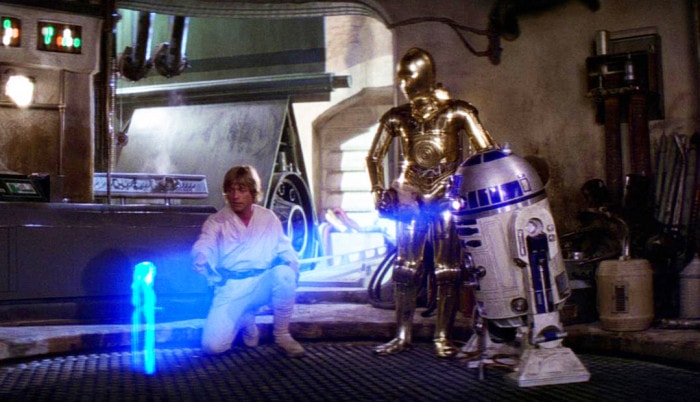

I call this the Princess Leia effect. It’s fine for movies, but in practice, it detracts from the believability of a mixed reality experience. In other words: not good.

“Help me, Obi-Wan Kenobi. You’re my only hope”

Magic Leap appears to have figured out a way around this problem.

The objects appear to have 100% opacity

Achieving true opacity is crucial for “tricking” the brain into thinking virtual objects are real. What remains to be seen, though, is whether self-illumination is required for opacity. In other words, if the objects must be “lit up” in order to fully block out the scene behind them, that will pose a new obstacle.
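A rough way to see why opacity and self-illumination are linked: if a see-through display can only add light on top of the real scene (an assumption made here for illustration, not a confirmed detail of either HoloLens or Magic Leap), then a dark virtual object can never darken a bright background. The sketch below compares that additive case with ordinary alpha compositing, where the virtual pixel actually replaces what is behind it. The function names and color values are hypothetical.

```python
def additive_blend(background, virtual):
    """Assumed see-through behavior: the display can only add light to the scene."""
    return tuple(min(1.0, b + v) for b, v in zip(background, virtual))


def occlusive_blend(background, virtual, alpha):
    """Ordinary alpha compositing: the virtual pixel replaces a fraction `alpha` of the background."""
    return tuple(alpha * v + (1.0 - alpha) * b for b, v in zip(background, virtual))


# RGB values in the range 0..1 (illustrative numbers only).
bright_wall = (0.75, 0.75, 0.75)
dark_object = (0.125, 0.125, 0.125)

additive = additive_blend(bright_wall, dark_object)
occluded = occlusive_blend(bright_wall, dark_object, alpha=1.0)

print(additive)  # (0.875, 0.875, 0.875): the wall shows through, slightly brighter
print(occluded)  # (0.125, 0.125, 0.125): the dark object fully hides the wall
```

Under the additive assumption, the only way to hide the wall is to make the object brighter than the wall, which is exactly the “lit up” scenario described above.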

The miniaturization problem

What you don’t see in the video above is the Magic Leap system itself, which is likely a huge, helmet-like contraption tethered to a beastly desktop machine. Miniaturizing such a system so that it can sit comfortably on the bridge of your nose is a massive engineering challenge.

IBM’s mighty Deep Blue (1997)

But, just to add some perspective, it’s worth considering that today’s smartphones are more powerful than supercomputers a scant 20 years ago. In 1997, IBM’s Deep Blue famously beat chess champion Garry Kasparov:

“It boasted a performance figure of 11.38 GFLOPS and could evaluate 200 million positions on the chessboard each second. Today, some 17 years later, the ARM Mali-T628MP6 GPU inside the Exynos-based Samsung Galaxy S5 outputs 142 GFLOPS.”

source: phoneArena

That’s roughly 12 times the raw computing power. In your pocket.
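As a quick check of that ratio, using only the two GFLOPS figures quoted above (the variable names are just for illustration):

```python
deep_blue_gflops = 11.38      # IBM Deep Blue, 1997, as quoted above
galaxy_s5_gpu_gflops = 142.0  # Mali-T628MP6 GPU in the Galaxy S5, as quoted above

ratio = galaxy_s5_gpu_gflops / deep_blue_gflops
print(f"{ratio:.1f}x")  # 12.5x
```

Peak GFLOPS is a crude yardstick, since Deep Blue relied on custom chess hardware, but it gives a sense of scale.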

So don’t worry. We’ll get there.