Video game graphics have entered a new era of realism. With current console and PC technology, players can experience complete worlds with accurate landscapes, materials, and in-game characters. Much of this is down to the rapid advancement of real-time game engines, in particular Epic Games’ Unreal Engine, which has drastically improved the realism of static characters.

However, it’s this same level of static realism that is shifting player perception away from image and shading quality and towards animation quality. Photoreal characters quickly become unconvincing when their facial and body animation is robotic and inauthentic. In fact, it’s this lack of authenticity that can undermine the end result of in-game animation. But this is changing with the adoption of performance-capture-driven pipelines.

Real Acting Performances

Performance capture is a term used to describe a full capture setup that combines body motion capture, facial capture, and audio recording. Its purpose is to track and record every element of an actor’s performance in a space and use that data to drive CG-animated characters in video games, films, and TV. Performance capture as a tool is nothing new to the entertainment industry and has been used in some capacity for a broad range of blockbuster films going back decades. The difference now is that body and facial tracking technology is so accurate that digital characters can embody the life and feel of a real actor.

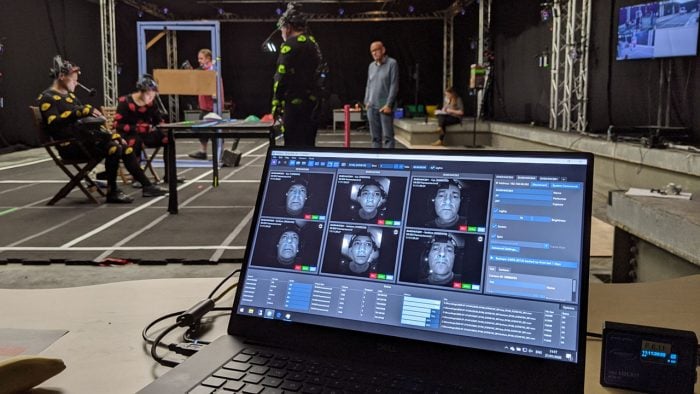

Full performance capture – mocap, facial capture and audio recording – was used for the recent game F1 2021 from Codemasters.

The key distinction between a traditionally animated in-game character and a character driven by performance capture data is the shift in focus from post-production to pre-production. A traditional animation rig requires many static scans of the person and is designed with shapes that are controlled by hand, without any performance data from the actor. This process requires a high level of skill and is unavoidably time-consuming.

Performance capture eliminates the drawbacks of a traditional rig while simultaneously achieving a greater level of authenticity. Take the human face, for example: the level of detail and nuance found in the emotion of someone’s performance can easily be overlooked, even by the greatest animators. Subtlety is often key in great acting performances, and cutting-edge facial capture technology can gather that information and translate it directly onto the performance of the animated character. In-game digi doubles quickly become accurate representations of “real” acting.

Facial capture from a DI4D HMC was used in F1 2021 to drive the digital doubles of key characters in the game.

The Unreal Engine

Epic Games’ Unreal Engine is one of the driving forces behind the mass adoption of cutting-edge facial and motion capture technology. In terms of shaders, it’s incredibly realistic, allowing game and motion designers to craft dynamic environments, objects, and characters. Creatives want to use Unreal as an editing tool and play with camera shots, lighting, and more to get the most out of the scene. Rather than shooting from multiple cameras, you have a full 3D scene and can start cutting in 3D in real time.

The reason this leads to the adoption of performance-driven animation and the creation of digi doubles is a shift in focus towards direction. Because Unreal makes editing so much easier, animators can spend more time thinking about the subtleties of the scene. This also makes traditional animation less appealing: performance capture shoots deliver such good data that less input is needed on the animation itself.

By lowering the level of interpretation and shifting to reproduction, typical video game projects, which can require 10 hours or more of animation, can reach a much higher standard of realism. Additionally, as consoles and PC platforms continue to render things faster and faster, players begin to expect boundary-pushing realism. As soon as one developer raises the bar, others try to match it. For example, F1 2021 pushed the character animation in its story mode cutscenes to reach the level of realism already achieved in the racing simulation sequences.

A Convergence of Industries

One interesting side effect of the shift towards performance-driven animation and digi doubles is the opportunity it creates for professionals from different artistic backgrounds. When a full performance capture shoot is directed, the setup begins to resemble something actors traditionally expect. The angle, direction, and sequences from the virtual camera are similar to something you might see on a television set, while for the actors the performance capture stage becomes a theatre.

Wearing cutting-edge, head-mounted facial capture cameras, actors perform together, acting out each shot in a single take. Their performance takes place inside an entire 3D scene, rather than in front of a backdrop, so the level and type of acting start to return to something more organic. This is where an opportunity arises for directors and writers of all backgrounds.

Actors interact for an F1 2021 cutscene – the whole scene is captured in one performance.

With the technology in place, more time is spent on the creative direction and writing of a scene – post-production work becomes less integral to the project, resulting in a change in focus. Video game production companies will also need individuals who really understand character development and narrative, and who can look at scenes in a video game from an entirely different perspective.

An Industry Shift

The only hurdle left for a complete shift to performance-driven animation is a change in thinking. Some video game companies can be risk-averse and like to use familiar production pipelines. But as the Unreal Engine continues to drive the level of graphical realism to unprecedented heights, performance capture will become a necessity and not just an alternative.