Motivations

What was the main motivation behind the use of AI in POOF, and how did it influence the creative process?

The main motivation behind using AI in POOF came from a kind of “aha” moment I had back in the summer of 2022. That’s when I first discovered Midjourney, and honestly, I was instantly captivated. From that point on, I was inspired to dive deeper into the latest AI-powered video, image, and audio tools and bring them into my creative process. In fact, POOF wouldn’t even exist without AI: it was inspired entirely by a single image I generated in Midjourney.

At the time, I was in a job that didn’t feel very fulfilling, so I channeled that feeling of being a bit lost and unmotivated. I mixed it with my love for all things Jim Henson and the kind of creativity his work represents. The blend of those feelings and inspirations became the spark for POOF, and AI really helped me shape that vision into something real.

What animation techniques and specific AI tools were used to create the visual and narrative style of POOF?

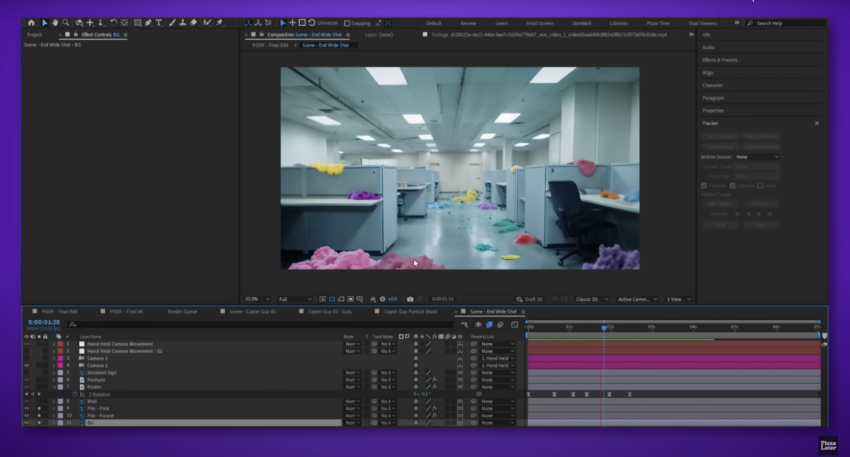

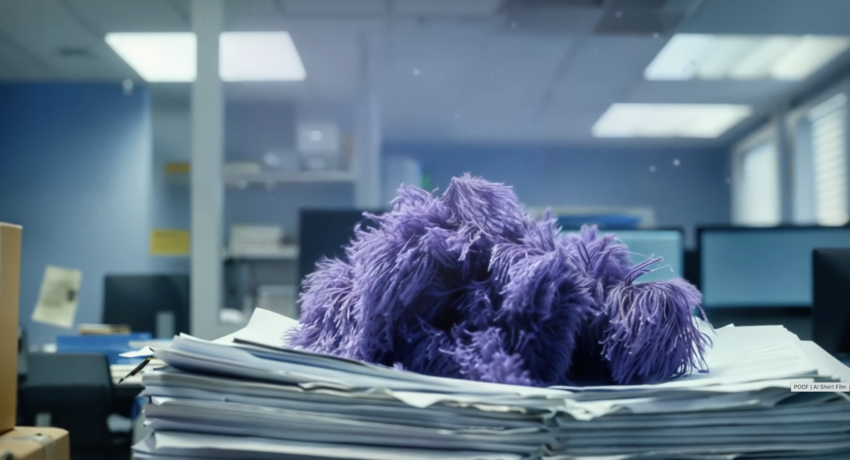

To create the visual and narrative style of POOF, I leaned heavily on AI tools to build out the animation and look of the film. All the images were generated in Midjourney, while I used Luma Labs’ Dream Machine for the video portions. Right now, AI video tools can be a bit tricky—especially for things like lip-syncing, which isn’t quite there yet. So, to work with these limitations, I decided to keep the characters quiet and use minimal movement. That way, I could avoid the weird, warped effects that AI can sometimes produce when it’s pushed too far with motion.

After setting up the base visuals with AI, I used some simple tricks in After Effects to bring the office scenes to life. Adding small, realistic details helped make the setting feel more believable, even with the limitations of current AI tools. So, the combination of Midjourney, Dream Machine, and some After Effects techniques allowed me to balance a unique visual style with the constraints of today’s AI technology.

How did the team manage collaboration between themselves and AI tools to maintain a balance between human creativity and automation?

When it comes to balancing human creativity with AI tools, I (the entire “team”) am constantly looking to push the boundaries of what AI can do, but in a way that still lets my own vision shine through. Too often, creators fall into a routine of just generating assets, syncing them to music or voice, and posting them on social media. For me, though, these platforms are more than just quick asset generators; they’re essential tools that I blend into my creative workflow.

Sometimes, that means generating hundreds of images until I find the one that perfectly fits the mood, shot, or scene I’m working on. AI tools are incredibly powerful, but they’re not always easy to shape into a finished product that’s visually consistent. That’s why I make a conscious effort not to rely on AI to spit out a fully completed piece. Instead, I use AI as one part of a bigger creative process. I want to keep the human touch and not lose sight of the story or mood I’m trying to convey. AI helps bring those elements to life, but the final vision still comes from me.

How was the main character developed, and what role did AI play in its design or animation?

POOF doesn’t exactly have a central character in the traditional sense, but the whole idea was inspired by a quirky character I rediscovered: “Flat Eric” from Mr. Oizo’s music videos like “Flat Beat.” Flat Eric’s simple, expressive design and minimalist movements brought a lot of personality to those videos, and I wanted to capture a similar vibe in POOF.

How were sound elements, music, and effects integrated to complement the immersive experience of AI?

What message or reflection about AI does the team hope viewers will take away from watching POOF?

Sound design is such a huge part of storytelling, and for POOF, it played an even bigger role because I had to work with some of the visual limits of AI. To really build the atmosphere of the office setting and capture the chaotic vibe, I focused heavily on the soundscape. I used Splice.com for all the sound effects, pulling together everything from background office noises to the sounds of the action unfolding. Then, I mixed it all in Adobe Audition to make sure each sound felt natural and immersive.

For the music, I turned to an AI music platform called Udio, which has become one of my favorites. I created the music track that plays over the end of the short film, aiming for a catchy, yacht-rock-inspired tune. The idea was to generate a song that could fit right into an elevator music playlist or play softly from a coworker’s cubicle radio: something familiar yet easygoing. Combining that sound design with AI-generated music helped bring POOF’s world to life and gave it more depth, complementing the visuals and making the short feel more complete.

Exploring the creative process: questions for the visionaries behind Pizza Later

What is Pizza Later’s creative philosophy, and how is it reflected in your animation projects?

My creative philosophy is all about using AI as an extra tool to bring my ideas to life—it’s never meant to take over or become the only part of what I create. I think it’s important to keep a human touch in everything I make, and that’s what I aim for in each project. The constant changes in AI can make it tricky to stay on top of all the new tools and techniques, but I try to keep up so I can use AI in the best way possible without letting it overshadow my own vision.

At the end of the day, I want to make things that people can connect to and enjoy. Whether that means creating something relatable, funny, or just visually interesting, my goal is always to make sure my work feels human—even if I’ve had a little help from AI.

What key influences or inspirations are important in Pizza Later’s visual and narrative style?

I’m an ’80s kid through and through, so a lot of my influences come from that era, especially the work of Jim Henson. I grew up watching a bootleg VHS copy of “Labyrinth” until we wore it out, and that film, along with Henson’s creations in general, left a huge mark on me. I still remember the glow of the TV static and the old HBO logo that played before the movies we recorded. There’s something magical about those moments that sticks with you.

Even though I’m now a creator working a lot with AI, I can’t help but miss the hands-on feel of practical effects and puppets. There’s a warmth and tangibility in those techniques that I try to bring into my own work, even if I’m using modern tools to get there. So while my projects might lean on AI for visuals, they’re always inspired by that nostalgic vibe—rooted in a love for the tactile, handmade quality of effects from decades ago.

How do you view the evolution of animation with AI, and what role do you think it will play in the future of your projects?

I’m really excited about the future of animation with AI and the creative potential it puts in my hands. Right now, I have a few ideas simmering in the back of my mind, and with the next generation of AI tools that are starting to emerge, I think I’ll be able to bring those ideas to life in ways I couldn’t have imagined before.

At the same time, I have mixed feelings about where AI might take media as a whole. While it’s an incredibly empowering tool, it also makes it easier for low-effort content to flood the space, which can be frustrating. But my focus is on the positive side: the fact that AI gives a voice to creators who might not have had access to traditional equipment or support. For people who always wanted to make a short film or a skit but didn’t have a camera or a team, AI opens doors to bring their ideas to life. I think that’s powerful, and it’s one of the things that keeps me motivated to keep exploring what AI can do.

What unique challenges have you faced in using AI in animation, and how did you overcome them?

One of the biggest challenges I’ve faced with using AI in animation is the visual limitations that come with AI-generated video. A lot of AI content has this noticeable warping effect, where movements or details don’t always look natural, and that can make it hard to achieve a polished final product. With POOF and future projects, I’m really pushing myself to go beyond the usual “AI look” and create something that is a bit more refined.

My goal is to elevate my work above the usual AI video output, so it feels less “janky” and more like a cohesive, well-crafted piece. It’s definitely a challenge, but overcoming those limits is part of what makes the process rewarding.

How is the team at Pizza Later structured, and how do you collaborate on experimental projects like this?

The “team” at Pizza Later is just me! I handle every part of the project myself, from concept to final editing. But I definitely don’t work in isolation—I have an amazing support group of other creators who help keep me inspired and give feedback along the way. They’re my go-to for bouncing ideas around and helping me make those tough editing decisions when I’m too close to the project to see it clearly. Big shout-out to the SVRG crew—you guys are the best!

What message do you hope to convey through your animation style, especially when experimenting with emerging technologies?

Through my work, especially when using emerging technologies like AI, I hope to show that these tools are just new additions to anyone’s creative toolkit. Right now, AI isn’t a magic button that instantly produces compelling stories or visuals; it’s not a one-stop solution for creating meaningful media. But in the hands of someone with a curious mind, AI can open up a world of possibilities.

I want people to see that AI can be used to bring unique ideas to life and add fresh elements to a project. It’s not about replacing creativity but enhancing it and exploring new directions. My hope is to inspire others to approach AI with an open mind, seeing it as a partner in the creative process rather than a replacement for their own ideas.