Virtual production has leapt from its initial basecamp of sports broadcasts and live concert events to the forefront of almost every discussion in the industry.

From The Mandalorian winning 14 Primetime Emmys, to Epic Games pushing the boundaries of real-time effects and animation with the Unreal Engine, to the travel restrictions and sky-high insurance costs caused by the coronavirus pandemic, the production process has been changed forever. And as technological limitations diminish, the opportunity for every type of production to be enhanced grows.

But what does ‘virtual production’ mean? And how do we get the most out of it? Consider this your introductory guide…

What is Virtual Production?

Virtual production is the combination of live-action footage and computer-generated graphics in real time.

Typical use cases would be augmented reality, where the graphics are overlaid on top of the filmed images, or virtual studio productions where a green backing color is replaced with a computer-generated studio or location.
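To make the virtual studio idea concrete, here is a minimal sketch of replacing a green backing color with a computer-generated background. It assumes images are plain nested lists of RGB tuples, and the names `is_backing`, `composite`, and the `dominance` threshold are ours for illustration. Production keyers are far more sophisticated (soft edges, spill suppression), so treat this only as the basic principle.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255
Image = List[List[Pixel]]     # rows of pixels


def is_backing(pixel: Pixel, dominance: int = 40) -> bool:
    """Treat a pixel as green backing when green clearly dominates red and blue.

    The dominance threshold is an illustrative assumption, not a standard value.
    """
    r, g, b = pixel
    return g - max(r, b) > dominance


def composite(foreground: Image, background: Image, dominance: int = 40) -> Image:
    """Replace every backing pixel in the foreground with the background pixel."""
    return [
        [bg if is_backing(fg, dominance) else fg for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]


# One green-screen pixel next to one skin-tone pixel, over a dark blue CG backdrop.
camera_frame = [[(20, 200, 30), (180, 150, 140)]]
cg_studio = [[(10, 10, 80), (10, 10, 80)]]
result = composite(camera_frame, cg_studio)
```

In this sketch only the green pixel is swapped for the CG backdrop, while the skin-tone pixel is left untouched.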

In recent times, the use of real-time computer-generated (CG) content displayed on giant LED walls instead of traditional green/blue screens, as we saw with the making of The Mandalorian, also falls under this term.

It’s also a new way of doing things, a mindset that incorporates new techniques unique to virtual production, not just the combination of different elements using technology.

It’s actually not new at all

Real-time virtual production has been around for more than 20 years, ever since filmmakers first began to experiment with VR to plan camera moves or superimpose a virtual world over live-action in real-time to give more information to the crew.

But it’s only in the last few years that the graphics quality has been good enough to really suspend the viewers’ disbelief, and the tools have become more widely available. Plus, the real world has been busy, too, generating a set of conditions that accelerated the industry’s desire to push things on.

Getting Unreal results

There is a blizzard of information out there about how to start integrating virtual production into your workflows. The idea behind Pixotope was to help more productions get up to speed faster and start being creative quickly.

Getting more familiar with real-time engines is a good place to start.

We use Epic Games’ Unreal Engine, a remarkable toolset for real-time graphics. To truly get the most out of it, it helps to have an artistic sense mixed with a fair bit of technical knowledge. For a skilled 3D artist, it shouldn’t take much time to get up to speed. For me, coming from the visual effects industry and having used most production tools out there, the transition was pretty straightforward.

For several years, we’ve focused on simplifying the workflows of studios wanting to use the Unreal Engine for virtual production.

Fix it in pre, not post

Virtual production is a completely different kind of workflow. Having real-time feedback on the day means that adjustments can be made on the spot, and it allows key departments to collaborate on creating the best image there and then. When you’re in the more linear workflow of traditional post, many important decision-makers may have already moved off the production onto new projects.

This does mean agreeing on more significant creative decisions in advance and building the right assets up front. All teams, from graphics to set decorators, need to be aligned earlier.

There are still some things that are more challenging for a real-time workflow, but it is catching up fast. Modeling and rigging, for example, still rely on traditional tools, because very advanced organic rigs with procedural content and complex cloth simulations are not yet available for real-time use. They will be in the not-so-distant future.

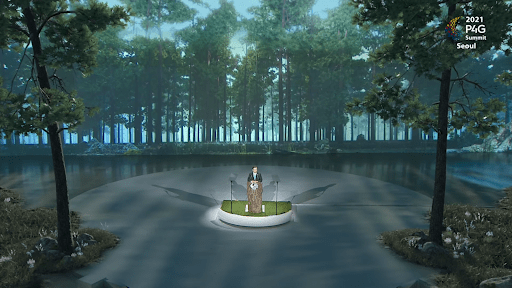

With virtual production, walls can also be windows

LED-wall workflows have gained traction during the last couple of years. Compared to the traditional use of green and blue screens, they allow the productions to view final content through the camera.

As an excellent by-product, they will give talent the impression of being on location and provide environmental lighting to the subjects. When connected to game engines and camera tracking systems, the LED walls become a window into the computer-generated environments. Viewed from the shooting camera, you get true depth and perspective into these environments that update in real-time as the camera moves.
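The "window" effect comes down to perspective geometry. As a rough sketch, assume a flat wall centered at the origin in the z = 0 plane and a tracked camera at distance z in front of it. The view frustum through the wall's edges then becomes asymmetric as soon as the camera moves off-center. The function name, the coordinate convention, and the dimensions below are our assumptions for illustration, and this is not how any particular engine or product implements it.

```python
from typing import NamedTuple


class Frustum(NamedTuple):
    left: float
    right: float
    bottom: float
    top: float


def wall_frustum(cam_x: float, cam_y: float, cam_z: float,
                 wall_width: float, wall_height: float,
                 near: float = 0.1) -> Frustum:
    """Off-axis frustum extents at the near plane for a camera looking at a flat wall.

    Assumes the wall is centered at the origin in the z = 0 plane and the camera
    sits at (cam_x, cam_y, cam_z) with cam_z > 0, in the same units as the wall.
    """
    if cam_z <= 0:
        raise ValueError("camera must be in front of the wall (cam_z > 0)")
    scale = near / cam_z  # similar triangles: wall edge -> near plane
    return Frustum(
        left=(-wall_width / 2 - cam_x) * scale,
        right=(wall_width / 2 - cam_x) * scale,
        bottom=(-wall_height / 2 - cam_y) * scale,
        top=(wall_height / 2 - cam_y) * scale,
    )


# Camera centered 4 m from an 8 m x 4 m wall: a symmetric frustum.
centered = wall_frustum(0.0, 0.0, 4.0, 8.0, 4.0)
# Camera dollies 2 m to the right: the frustum skews, which is what
# produces correct parallax in the rendered environment.
shifted = wall_frustum(2.0, 0.0, 4.0, 8.0, 4.0)
```

Feeding extents like these into the renderer's projection every frame, driven by the tracking data, is what keeps depth and perspective consistent as the camera moves.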

Being able to jump to any location, shoot a sunset that lasts all day, or move mountains in an instant opens up many practical applications.

What are the other hallmarks of virtual production?

Camera tracking.

An exact match of camera properties is essential to make sure the graphics do not slip in any way. Aside from the obvious things, such as the camera’s position, rotation, and sensor, it is important to match each lens’s distortion and focus properties. The latter is usually done through a meticulous process of creating a lens profile. There are quite a few tracking vendors out there, so you must carefully evaluate the needs of the production.
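To give a feel for what a lens profile captures, here is a minimal sketch of a radial distortion model of the Brown-Conrady type, a common way to describe how a lens bends straight lines. The coefficient values are made up for illustration. A real profile is measured per lens and typically varies with focus and zoom, and tracking vendors may use different models.

```python
from typing import Tuple


def distort(x: float, y: float, k1: float, k2: float = 0.0) -> Tuple[float, float]:
    """Apply two-term radial distortion to a normalized image point.

    (x, y) is measured from the optical center. Negative k1 pulls points
    toward the center (barrel distortion), positive k1 pushes them outward
    (pincushion distortion).
    """
    r2 = x * x + y * y                      # squared distance from the center
    factor = 1.0 + k1 * r2 + k2 * r2 * r2   # 1 + k1*r^2 + k2*r^4
    return x * factor, y * factor


# Hypothetical coefficient for a mildly barrel-distorting lens.
K1 = -0.1

center = distort(0.0, 0.0, K1)  # the optical center never moves
edge = distort(1.0, 0.0, K1)    # a point at the edge is pulled inward
```

If the graphics are rendered without the same distortion as the physical lens, virtual objects line up in the middle of the frame but drift toward the edges, which is exactly the slipping described above.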

Good news for storytellers

Virtual production is here to stay. It will not totally replace traditional workflows, but we will see it grow in prominence, and new technologies will open the door for more productions to adopt it.

We’re already seeing huge sports events, the medical world, eSports tournaments, and news and weather broadcasts transformed – in a workflow and a creative sense – by virtual production.

What’s really exciting for storytellers and viewers is how virtual production techniques like real-time data input enhance viewer engagement and immersion in the event itself, taking viewership and participation to new heights.

As you can see, we’ve come a long way already, but we’re still only just finding out what’s possible.