It may take years or even decades before we see a broad, all-encompassing metaverse like in Ready Player One or even Facebook’s lavish demo. However, pixel by pixel, avatar by avatar (and eventually, world by world), the digital universe is starting to take shape around us.

Some describe the metaverse as the next great evolution of the internet, a place where drab websites and app interfaces become truly immersive environments. We already work, play, socialize, and shop online; now, what does that look like in 3D with loads of other users around?

While the metaverse may primarily be a digital construct, the shift to immersive 3D spaces inevitably means it will carry characteristics from the physical world. Even more interestingly, the metaverse has the potential to blur the lines between the two, whether it’s in look or feel–and truly, I mean feel.

Ultimately, motion capture technology may hold the key to not only bringing the metaverse to life at scale but doing so in a genuinely believable fashion.

Like the real thing

As virtual reality has evolved and improved over the years–becoming more technically advanced and immersive but also more accessible–we’ve seen how the barriers between digital and physical experiences can soften. With all the elements in sync and disbelief in suspension, a VR experience can feel nearly as genuine as the real thing.

The metaverse probably won’t be solely experienced in VR or even augmented reality (AR) headsets, which more literally straddle the lines between physical and digital imagery. Just as proto-metaverse games like Second Life, Fortnite, and Roblox exist on 2D screens, I imagine that future online worlds will similarly allow interaction via standard displays.

Even so, nailing the look and feel of a world will be paramount to delivering the illusion of reality, and that’s where capture technology will come into play. Motion capture will be essential for creating lifelike animation for characters and creatures in these virtual worlds so that players feel like they are interacting with real beings.

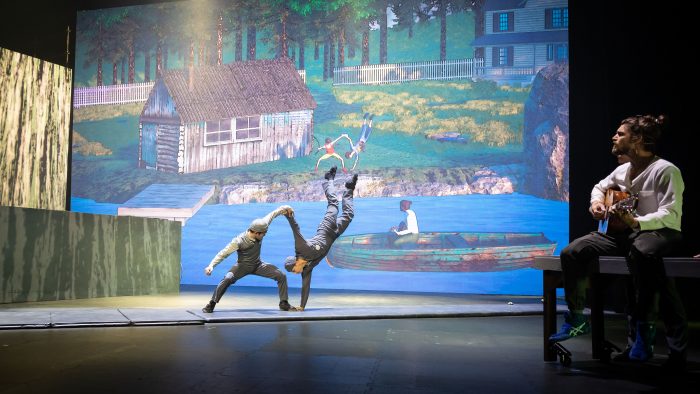

And it doesn’t just have to be canned, pre-recorded animation either. The metaverse opens the door to new kinds of live interactivity, where “characters” are piloted by real people, or where users flock to concerts and performances with avatars controlled by their real-world counterparts. We’re already seeing the latter, in fact. For example, virtual entertainment company Wave put on a live Justin Bieber virtual concert experience in November 2021, with the pop sensation’s every move, word, and sung note translated to his lifelike avatar in real time.

But it won’t just be people that need to look real and move accordingly. Increasingly, it’ll be the places that need to look like their real-world analogs to deliver powerfully immersive metaverse experiences. Luckily, the technology to bring objects and environments into the digital realm keeps becoming more affordable, more accessible, and more powerful.

LiDAR scanners, for example, have become drastically cheaper in recent years and make it possible to turn sizable real-world settings into digital worlds. Some can even autonomously stitch together rooms and spaces to recreate a place in exacting detail. Even today’s smartphones can capture real-world items and backdrops with surprising fidelity.

Granted, not every metaverse world has to be realistic. I foresee a wide array of online spaces to meet every mood and desire, including dazzling locales that are fantastical, surreal, and truly unlike anything seen in the physical world. Even so, capture technology can help ensure that those places have a smooth, polished feel and flow.

Gamers lived through years and years of janky 3D worlds and awkward cut-scenes as the technology matured, and we’ve all learned lessons from the early days of VR too. Stuttery graphics and unrefined environments can be a very quick turn-off in an immersive medium, and that’s sure to be the case in the metaverse as well.

Feeling the metaverse

The blurring of the lines between the digital and physical in the metaverse may come from another, more surprising source: haptic technology.

It started with rumbling game controllers and booming gaming chairs and evolved into subtle dings from your Apple Watch, but haptic technology could take some very meaningful steps ahead as the metaverse takes shape.

We’re already seeing haptic gloves for VR headsets that let players not only feel what they’re touching in the digital world but also interact with it more naturally. Granted, these devices are both experimental and expensive, but they signal a potentially significant market for hardware that makes the digital world feel every bit like the real thing.

It probably won’t just be gloves, either. Imagine haptic vests or apparel that bring those sensations across your entire body. What if the metaverse could be enhanced with tastes and scents that tantalize your senses? I can’t wait to see the capture technology that makes those potential devices a reality for metaverse explorers strapped in at home.

That’s an elaborate vision of what might be possible on the high end of things, but I don’t believe that users will need thousands of dollars’ worth of hardware or elaborate setups to feel something when they enter the metaverse. Communal experiences will also help digital worlds feel real on all sorts of devices.

Wave’s metaverse concert with Justin Bieber was a key example of this. People all around the world could log on and access the live event, and they didn’t need hyper-expensive PCs or extensive tech know-how. But fans felt absolutely connected, not only to Justin but also to each other around the globe. It transcended the physical divide.

It affirmed to me that there will be many ways to connect digital metaverse worlds not only to physical environments but also to physical sensations. Granted, it’s super early days for the metaverse, but through early experimentation with motion capture tech, we’re already seeing how it can be empowering and accessible for all sorts of users.